As of Servoy 8.2(.1), Servoy can send its data broadcast messages through an AMQP message bus. Any other kind of messaging system can be used as well, by building on the new pure server API of IServerAccess (IDataNotifyService and IDataNotifyListener), which is what the broadcaster plugin itself uses.

The default "broadcaster" plugin that Servoy ships with currently has one option: the hostname of a RabbitMQ installation. Set it on every installed Servoy server (WAR deployment) that should exchange data broadcasts with the others. The hostname is specified via the property 'amqpbroadcaster.hostname' in servoy.properties.
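As a minimal sketch of how this setting could be checked before a deployment, the snippet below parses servoy.properties-style text with `java.util.Properties` and reads `amqpbroadcaster.hostname`. The class `BroadcasterConfig`, the method `rabbitHost`, and the host name `rabbitmq.example.com` are illustrative assumptions and not part of Servoy.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Optional;
import java.util.Properties;

// Hypothetical helper for illustration only; this is not a Servoy class.
class BroadcasterConfig {
    // Property name taken from the text above.
    static final String HOST_KEY = "amqpbroadcaster.hostname";

    // Returns the configured RabbitMQ host, or empty when the property is missing or blank.
    static Optional<String> rabbitHost(String propertiesText) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(propertiesText));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        String host = props.getProperty(HOST_KEY);
        if (host == null || host.trim().isEmpty()) {
            return Optional.empty();
        }
        return Optional.of(host.trim());
    }
}
```

For example, `BroadcasterConfig.rabbitHost("amqpbroadcaster.hostname=rabbitmq.example.com\n")` yields the assumed host name, while text without the property yields an empty result, which a deployment script could treat as "broadcasting not configured".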

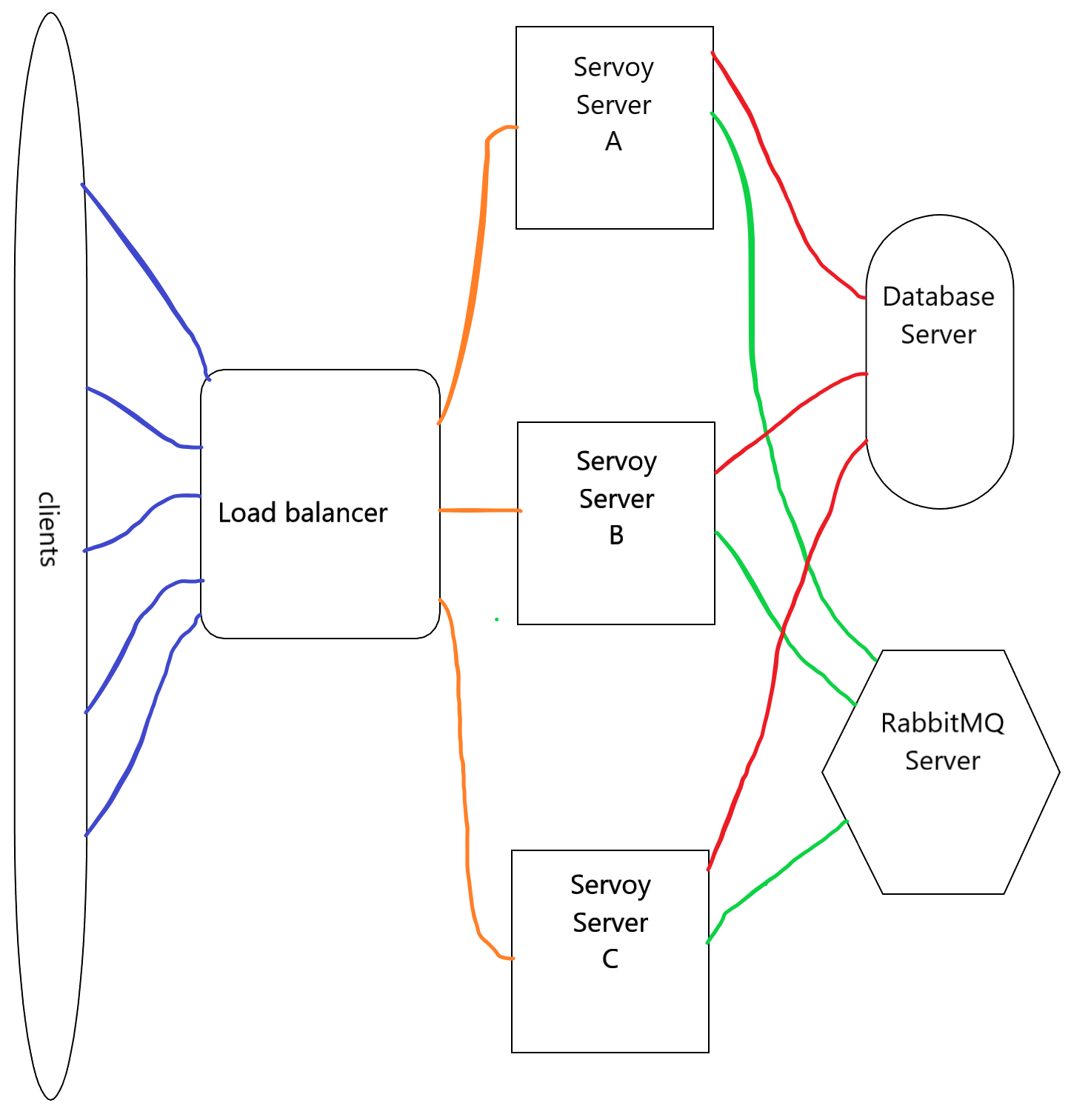

Consider a setup of 3 Servoy servers talking to the same database and using a RabbitMQ server to synchronize the databroadcast messages.

In this setup it is better for every Servoy server to have its own repository database. This way you can upgrade one server without touching anything else.

Things that are not supported in this setup are:

- Pure Servoy record locks. Record locks have to be database locks: servoy.record.lock.lockInDB must be true, and the database must support it.

- Servoy sequences.

This approach gives you scaling and also zero downtime deployments: put one of the servers in maintenance mode, wait until all of its clients are gone, upgrade that server by deploying a new WAR, and then take it out of maintenance mode again.

The load balancer should recognize maintenance mode by catching the 503 (SC_SERVICE_UNAVAILABLE) response and then redirect the request to another server.
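The routing rule can be sketched as follows. This is only an illustration of the logic under simplified assumptions: a real load balancer expresses it in its own configuration, and the class `MaintenanceAwareRouter`, the method `pickBackend`, and the probe function are hypothetical names.

```java
import java.util.List;
import java.util.Optional;
import java.util.function.ToIntFunction;

// Hypothetical routing helper for illustration; not part of Servoy or any load balancer.
class MaintenanceAwareRouter {
    // HTTP status that a server in maintenance mode answers with.
    static final int SC_SERVICE_UNAVAILABLE = 503;

    // Returns the first backend whose probe does not answer 503,
    // or empty when every backend is in maintenance mode.
    static Optional<String> pickBackend(List<String> backends, ToIntFunction<String> probe) {
        for (String backend : backends) {
            if (probe.applyAsInt(backend) != SC_SERVICE_UNAVAILABLE) {
                return Optional.of(backend);
            }
        }
        return Optional.empty();
    }
}
```

The probe is passed in as a function so that the sketch stays independent of any HTTP client; in practice it would be the status code of the request the load balancer forwarded.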

If you only need zero downtime deployments, a simpler setup is Tomcat parallel deployment. You then have only 1 Tomcat server with 1 WAR talking to a RabbitMQ server. When upgrading, build a new WAR with a higher version and deploy it on the same Tomcat. Tomcat makes sure that new sessions end up in the latest version while older clients keep working on the previous version. The MQ server makes sure both versions see all the Servoy databroadcast notifications.
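Tomcat identifies parallel versions by a `##version` suffix in the WAR file name and orders versions by string comparison. The sketch below shows which of several deployed WARs would count as the latest under that rule. The class `ParallelDeploymentNames` and the file names such as `myapp##001.war` are assumptions made for illustration.

```java
import java.util.Comparator;
import java.util.List;
import java.util.Optional;

// Hypothetical helper for illustration; it mimics string-based version ordering.
class ParallelDeploymentNames {
    // Extracts the version from "name##version.war"; empty string when no version is present.
    static String versionOf(String warName) {
        String base = warName.endsWith(".war")
            ? warName.substring(0, warName.length() - 4)
            : warName;
        int idx = base.indexOf("##");
        return idx < 0 ? "" : base.substring(idx + 2);
    }

    // Returns the WAR with the highest version according to plain string comparison.
    static Optional<String> latest(List<String> warNames) {
        return warNames.stream().max(Comparator.comparing(ParallelDeploymentNames::versionOf));
    }
}
```

Because the comparison is on strings, `myapp##9.war` sorts after `myapp##10.war`. Zero-padded versions such as `001`, `002` avoid that surprise.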

For NGClient you have to turn on the setting 'servoy.ngclient.useHttpSession' (Servoy 8.2.1) on the admin page for this setup, so that an HTTP session is created even for the websockets. This way Tomcat or the load balancer knows that an incoming request is meant for Server C, or for an older version in a parallel deployment. Maintenance mode also works a bit differently for NGClient, because the initial call to the "index.html" page already returns a 503 error response code. If there is already an NGClient for that session, the call passes and either reuses the same client (if it was a page refresh) or reports to the user that the server is in maintenance mode.

A user needs to close all NGClient browser tabs (all of that user's NGClients on the server need to terminate) before that user is moved to a new version or another server.

A Servoy developer can use the new clientmanager plugin to see which client uses which HTTP session id. This information is stored in the client info entry that starts with "httpsessionid:" and is also shown on the admin page.
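Extracting the session id from such client info entries could look like the sketch below. It assumes the entries are already available as plain strings; the class `ClientInfoParser`, the method `httpSessionId`, and the sample values are hypothetical, and the clientmanager plugin call that supplies the entries is not shown.

```java
import java.util.Arrays;
import java.util.Optional;

// Hypothetical helper for illustration; it only handles plain strings.
class ClientInfoParser {
    // Prefix named in the text above.
    static final String PREFIX = "httpsessionid:";

    // Returns the value of the first entry that starts with the prefix, if any.
    static Optional<String> httpSessionId(String[] clientInfos) {
        return Arrays.stream(clientInfos)
            .filter(info -> info != null && info.startsWith(PREFIX))
            .map(info -> info.substring(PREFIX.length()).trim())
            .findFirst();
    }
}
```

Given the assumed entries `{"user:bob", "httpsessionid:ABC123"}`, the method returns `ABC123`. Entries without the prefix are ignored.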